Statistical Fallacies and Biases in Clinical Research

When Numbers Deceive and Minds Comply: Recognizing and Resisting the Illusion of Causality

Introduction

Clinical research is not immune to cognitive illusions. While statistics may offer a veneer of objectivity, they are often interpreted through the fog of human bias. This lesson confronts the subtle, but potent, errors in reasoning that pervade clinical science and practice, with particular focus on causal illusions: false beliefs in causal relationships arising from flawed study design, data interpretation, or human judgment.

We now bring the series full circle: from introducing p-values and confidence intervals to confronting the human tendencies that persistently mislead us, even when the statistics seem sound. These are not just “errors in math”; they are errors in meaning.

The Spectrum of Study Designs: Control vs. Context

Before we explore specific fallacies, it’s important to zoom out and consider the landscape in which they occur. Not all clinical studies are built the same. And crucially, not all are equally vulnerable to bias, or equally capable of reflecting the complexity of real-world care.

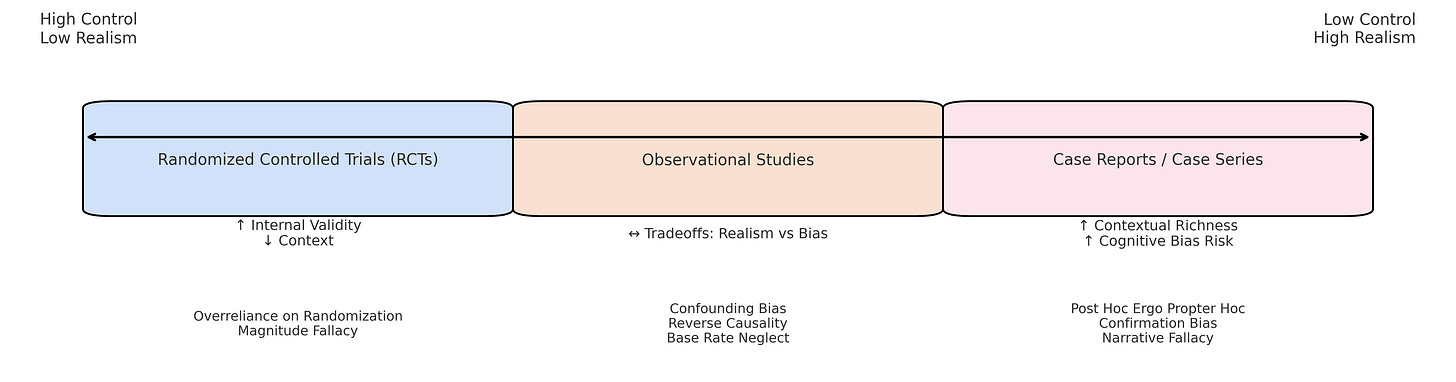

There’s a spectrum of clinical study designs that stretches from highly controlled to deeply contextual:

At one end, Randomized Controlled Trials (RCTs) aim to minimize bias through strict randomization, blinding, and control. They’re engineered for causal clarity, but often sacrifice ecological validity. These studies tell us whether something can work under ideal, closed (isolated) conditions. Applying their results to an open, real-world system therefore requires many additional assumptions.

In the middle are observational studies, where data are collected from real-world settings without intervention. They offer richer context (more variation, comorbidities, and practice patterns) but invite more ambiguity. Without randomization, we must wrestle with confounding, selection bias, and the illusion of control.

At the other end lie case reports and case series. These are the raw stories of clinical life. Narrative-rich, emotionally salient, and highly specific. They capture what it’s like to be a practitioner or a patient. But they are also the natural habitat of cognitive bias: they are especially vulnerable to post hoc reasoning, confirmation bias, and causal coherence illusions.

Here’s the core lesson:

As we move from RCTs to case reports, we gain context but lose control. We gain realism, but we invite fallacy.

None of these designs is “wrong.” Each serves a purpose. But each amplifies different risks, and those risks must be understood if we are to interpret findings with epistemic care.

This spectrum is not just methodological. It mirrors the tension between two core values in evidence-based practice:

The desire for causal clarity, and

The need for contextual relevance.

It is precisely in this tension that many statistical and cognitive fallacies thrive.

The Illusion of Causality: Three Faces of the Fallacy

The illusion of causality does not arise from thin air. It emerges from the structure of our research, the way we interpret data, and the stories we tell ourselves and others. Each of these illusions is shaped and magnified differently across the spectrum of study designs.

Illusions Embedded in Study Design

Some illusions are baked into the very architecture of research. When a study appears to show a treatment works, its design might silently introduce bias, especially if the controls for confounding or temporal sequence are weak.

These illusions are more likely to surface as we move away from randomized control and toward more naturalistic or open-system research designs:

Post Hoc Ergo Propter Hoc

Mistaking temporal succession for causation. This is especially common in before/after studies and case series, and pervasive in clinical practice and patient stories.

Confounding Bias

Arises when a third factor explains both the “cause” and the “effect.” This is especially problematic in observational studies lacking randomization, but it can also occur when randomization fails (for example, through chance imbalance in small samples) or when other design flaws are present.

Overreliance on Randomization

Assuming that randomization alone guarantees causal validity, ignoring the need for mechanistic understanding or consideration of external validity. This fallacy can obscure contextual or biological complexity even in well-conducted RCTs. “Statistical associations in RCTs often lack causal depth. A treatment may appear effective on average, but without investigating underlying mechanisms, we risk mistaking correlation for causation.” Anjum et al., Rethinking Causality
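The confounding mechanism described above can be made concrete with a small simulation. This is a minimal sketch under stated assumptions, not a model of any real study: the severity split, the treatment probabilities, and the recovery probabilities are all hypothetical, and the treatment is deliberately given no true effect.

```python
import random


def simulate(n, randomized, seed=0):
    """Return the recovery-rate difference (treated minus untreated).

    The treatment has NO true effect in this sketch. Only baseline
    severity (the confounder) influences recovery.
    """
    rng = random.Random(seed)
    treated, untreated = [], []
    for _ in range(n):
        severe = rng.random() < 0.5  # hypothetical 50/50 severity split
        if randomized:
            gets_treatment = rng.random() < 0.5  # coin flip, blind to severity
        else:
            # Hypothetical practice pattern: mild cases are treated more often.
            gets_treatment = rng.random() < (0.2 if severe else 0.8)
        # Recovery depends on severity only, never on treatment.
        recovers = rng.random() < (0.3 if severe else 0.7)
        (treated if gets_treatment else untreated).append(recovers)
    return sum(treated) / len(treated) - sum(untreated) / len(untreated)


observational_diff = simulate(20_000, randomized=False)
randomized_diff = simulate(20_000, randomized=True)
print(f"Observational difference: {observational_diff:+.3f}")
print(f"Randomized difference:    {randomized_diff:+.3f}")
```

With these assumed numbers, the observational comparison should show a difference of roughly 0.24 in favor of a treatment that does nothing, because treated patients were milder to begin with. Under random assignment the difference should hover near zero. Note what the sketch does not show: randomization removes this particular bias, but it says nothing about mechanism or about who the result applies to.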

Illusions Arising in Interpretation

Even when studies are well-designed, they can be misread or misrepresented. These interpretive fallacies often stem not from the data itself, but from how we frame or consume it. This is particularly true under pressure to translate findings into clinical action.

These are common in observational studies and RCTs alike, but become more acute when results are reduced to headlines, p-values, or overconfident summaries.

Magnitude Fallacy

Treating statistical significance as if it were clinical significance.

Base Rate Neglect

Ignoring the prior probability or incidence of an event when interpreting predictive values.

Reverse Causality

Misjudging the direction of cause and effect, a common trap in observational data where temporal relationships are unclear or unmeasured.
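Base rate neglect, described above, is easiest to see with arithmetic. The sketch below applies Bayes' theorem to a hypothetical test with 90% sensitivity and 90% specificity; the test characteristics and the three prevalence values are illustrative assumptions, not figures from any study.

```python
def positive_predictive_value(sensitivity, specificity, prevalence):
    """Probability that a positive result is a true positive (Bayes' theorem)."""
    true_positives = sensitivity * prevalence
    false_positives = (1 - specificity) * (1 - prevalence)
    return true_positives / (true_positives + false_positives)


# Same hypothetical test, three different base rates.
for prevalence in (0.01, 0.10, 0.50):
    ppv = positive_predictive_value(0.90, 0.90, prevalence)
    print(f"Prevalence {prevalence:>4.0%} -> PPV {ppv:.1%}")
```

The same test yields a positive predictive value of about 8% at 1% prevalence, 50% at 10% prevalence, and 90% at 50% prevalence. Nothing about the test changed, only the base rate.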

Personal Experience as a Source of Causal Belief

The most pervasive illusions arise not from published research, but from firsthand experience. These are the “felt truths” that clinicians and patients derive from their day-to-day encounters: emotionally resonant, cognitively sticky, and often misleading.

These illusions dominate at the case report and anecdotal level, where context is rich but control is absent.

“I did X and then Y happened, so X caused Y.”

“When I use this technique, patients feel better — so it must work.”

These are examples of personal induction, our natural tendency to extract causal meaning from co-occurrence, especially when outcomes are emotionally or socially reinforced.

These stories are powerful because they feel true, and because they’re rarely subject to structured scrutiny.

These illusions are structured patterns of reasoning that surface repeatedly across clinical studies and practice. Below, we organize them into statistical, cognitive, and narrative categories, each with characteristic vulnerabilities and strategies for resistance.

Core Fallacies and Biases in Clinical Research

Each entry below follows the same pattern: what it is, when to look for it, and how to guard against it.

Statistical Fallacies

Multiple Comparisons Bias

What it is: The risk of finding at least one statistically significant result purely by chance when multiple hypotheses are tested.

When to look for it: Any study reporting many outcomes, subgroup analyses, or testing multiple variables without adjusting p-values or correcting for false discovery.

How to guard against it:

Use correction procedures (e.g., Bonferroni, False Discovery Rate).

Pre-register primary outcomes.

Evaluate effect sizes, confidence intervals and consistency rather than isolated p-values.
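To make the problem and the two named corrections concrete, here is a minimal sketch. It assumes independent tests for the family-wise error calculation, and the list of p-values is hypothetical. The Benjamini-Hochberg step-up procedure is used as one common way to control the False Discovery Rate.

```python
def familywise_error_rate(alpha, m):
    """Chance of at least one false positive across m independent true-null tests."""
    return 1 - (1 - alpha) ** m


def bonferroni(p_values, alpha=0.05):
    """Reject only p-values at or below alpha divided by the number of tests."""
    m = len(p_values)
    return [p <= alpha / m for p in p_values]


def benjamini_hochberg(p_values, alpha=0.05):
    """Step-up FDR procedure: reject the k smallest p-values, where k is the
    largest rank with p_(k) <= (k / m) * alpha."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    max_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * alpha:
            max_rank = rank
    rejected = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= max_rank:
            rejected[idx] = True
    return rejected


# Hypothetical p-values from six outcomes in one study.
p_values = [0.001, 0.008, 0.020, 0.041, 0.30, 0.62]

print(f"FWER for 20 tests at alpha=0.05: {familywise_error_rate(0.05, 20):.2f}")
print("Uncorrected:", [p <= 0.05 for p in p_values])
print("Bonferroni: ", bonferroni(p_values))
print("BH (FDR):   ", benjamini_hochberg(p_values))
```

With 20 independent tests at alpha = 0.05, the chance of at least one false positive is about 64%. For the hypothetical p-values, four outcomes look significant uncorrected, three survive Benjamini-Hochberg, and two survive Bonferroni. Which correction is appropriate depends on whether the priority is avoiding any false positive or limiting their proportion.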

Regression to the Mean

What it is: The statistical tendency for extreme values (high or low) to move closer to the average on subsequent measurement, independent of intervention.

When to look for it: Especially common in before-and-after studies, pilot trials, or case reports where patients are selected based on unusually severe symptoms.

How to guard against it:

Include a comparison group or randomized control.

Interpret single-subject or uncontrolled improvement cautiously.

Consider baseline variability when evaluating treatment effects.
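A short simulation shows how regression to the mean can masquerade as a treatment effect. This is a sketch under assumed values: a 0 to 10 style symptom score, a stable true score per patient, independent measurement noise at each visit, and an enrollment threshold of 7. No treatment is applied at any point.

```python
import random
import statistics

rng = random.Random(42)
n_patients = 10_000

# Each patient has a stable true score; every measurement adds independent noise.
true_scores = [rng.gauss(5.0, 1.0) for _ in range(n_patients)]
baseline = [t + rng.gauss(0.0, 1.0) for t in true_scores]
follow_up = [t + rng.gauss(0.0, 1.0) for t in true_scores]

# Enroll only patients who looked severe at baseline (hypothetical cutoff).
selected = [i for i, score in enumerate(baseline) if score >= 7.0]

mean_baseline = statistics.mean(baseline[i] for i in selected)
mean_follow_up = statistics.mean(follow_up[i] for i in selected)

print(f"Enrolled patients:  {len(selected)}")
print(f"Mean at baseline:   {mean_baseline:.2f}")
print(f"Mean at follow-up:  {mean_follow_up:.2f}  (no treatment given)")
```

The enrolled group improves on average even though nothing was done, because selecting on an extreme measurement also selects for unusually large noise on that day. A comparison group selected the same way would show the same drop, which is why it is the first safeguard listed above.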

Cognitive Biases

Confirmation Bias

What it is: The tendency to favor, search for, or interpret information in ways that confirm one’s existing beliefs or hypotheses.

When to look for it: In both research interpretation (e.g., cherry-picking supportive findings) and clinical reasoning (e.g., sticking with an initial diagnosis despite conflicting signs).

How to guard against it:

Actively seek disconfirming evidence.

Use structured diagnostic checklists or Bayesian updating.

Involve peers for second opinions or external critique.
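Bayesian updating, mentioned above, can be sketched in odds form with likelihood ratios. The prior probability and the two likelihood ratios below are hypothetical, and the sketch assumes the findings are conditionally independent given the diagnosis.

```python
def update_probability(prior, likelihood_ratio):
    """Convert a prior probability to a posterior using a likelihood ratio."""
    prior_odds = prior / (1 - prior)
    posterior_odds = prior_odds * likelihood_ratio
    return posterior_odds / (1 + posterior_odds)


probability = 0.30  # hypothetical initial diagnostic impression
findings = [
    ("supportive finding", 4.0),    # LR > 1 raises the probability
    ("conflicting finding", 0.25),  # LR < 1 lowers it
]

for label, likelihood_ratio in findings:
    probability = update_probability(probability, likelihood_ratio)
    print(f"After {label}: {probability:.2f}")
```

The supportive finding raises the probability from 0.30 to about 0.63, and the conflicting finding brings it back to 0.30. Confirmation bias amounts to applying the first update and skipping the second.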

Outcome Bias

What it is: The tendency to judge a decision by its eventual outcome rather than the quality of the decision-making process at the time.

When to look for it: Retrospective case reviews, audit meetings, or clinical debriefings, especially after bad outcomes.

How to guard against it:

Focus evaluations on the logic and evidence used at the time, not in hindsight.

Train teams to distinguish process quality from result.

Practice simulation-based decision-making with probabilistic outcomes.

Narrative-Based Errors

Causal Coherence Bias

What it is: The cognitive tendency to impose causal connections between events in a story to create a coherent narrative, even when those connections don’t exist.

When to look for it: In clinical case discussions, journalistic summaries of research, or patient storytelling — any setting where sequences are interpreted causally without sufficient evidence.

How to guard against it:

Use causal diagrams (e.g., DAGs) to differentiate correlation from causation.

Apply Hill’s criteria to evaluate causal claims.

Ask: What other explanations are consistent with this sequence?

Conviction Narrative Fallacy

What it is: The belief that a compelling, emotionally resonant story must be true, especially under conditions of uncertainty.

When to look for it: Under diagnostic uncertainty, in treatment decisions when mechanisms are unknown, or when charismatic experts offer anecdotal support.

How to guard against it:

Separate plausibility from empirical support.

Cross-check with structured evidence reviews or mechanistic models.

Ask: What evidence would falsify this story?

Why Do These Illusions Persist?

Psychological research shows that illusions of causality are not random. They are systematic, predictable, and hard to extinguish:

High Outcome Density (many positive outcomes) leads people to overestimate treatment efficacy.

Action Frequency can exaggerate perceived control: the more one intervenes, the more one sees improvement — regardless of actual effect.

Prior Beliefs act as filters, biasing what evidence is noticed and how it’s weighted.

“Illusory beliefs can block learning from evidence. This may explain why ineffective treatments remain popular in practice.” Matute et al., 2015
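The effect of outcome density and action frequency can be checked against a simple contingency index, often written as ΔP: the probability of improvement with the action minus the probability without it. The sketch below uses a hypothetical caseload in which 80% of patients improve regardless of treatment and the clinician treats 90% of them.

```python
def delta_p(treated_improved, treated_not, untreated_improved, untreated_not):
    """Contingency index: P(improve | treated) - P(improve | untreated)."""
    p_treated = treated_improved / (treated_improved + treated_not)
    p_untreated = untreated_improved / (untreated_improved + untreated_not)
    return p_treated - p_untreated


# Hypothetical caseload of 100 patients.
treated_improved, treated_not = 72, 18      # 90 treated, 80% improve
untreated_improved, untreated_not = 8, 2    # 10 untreated, 80% improve

contingency = delta_p(treated_improved, treated_not, untreated_improved, untreated_not)
print(f"Treated patients who improved: {treated_improved}")
print(f"Delta P: {contingency:.2f}")
```

The clinician sees 72 treated patients get better and very few untreated ones to compare against, yet the contingency is zero. The impression of efficacy comes from how often the outcome and the action occur, not from any relationship between them.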

Integrating CR-GCMs to Recognize and Resist Fallacies

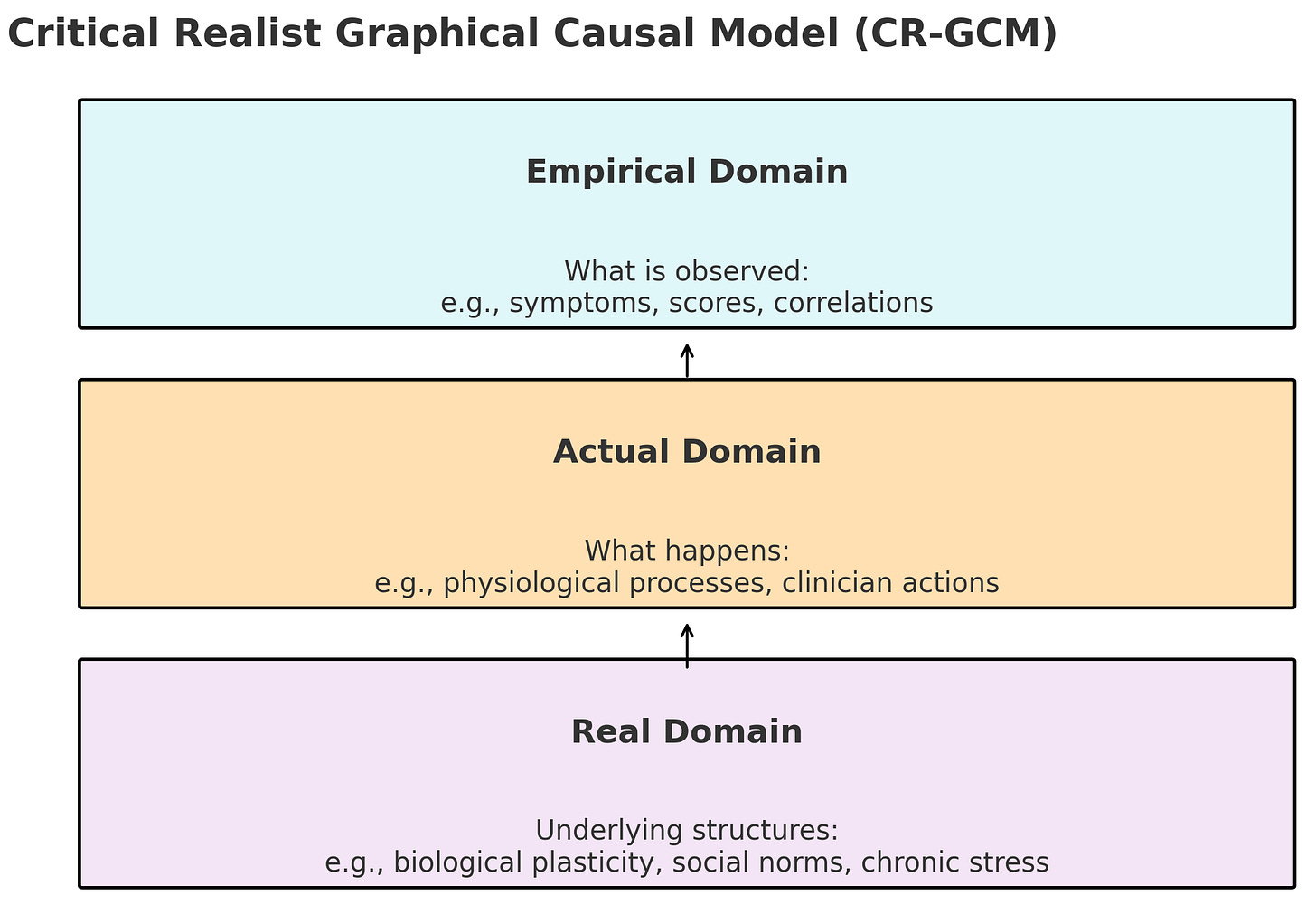

Critical Realist Graphical Causal Models (CR-GCMs) help identify where fallacies take root:

Empirical Domain: Where illusions are observed, e.g., improved pain scores after intervention.

Actual Domain: Where real mechanisms may (or may not) operate, e.g., neuromuscular adaptation.

Real Domain: Underlying causal structures (e.g., biological plasticity, social behavior norms) that may not be directly observed but exert real generative effects.

By explicitly modeling unobserved mechanisms and structures, CR-GCMs move beyond surface correlations to causal depth, guarding against both statistical and narrative illusions.

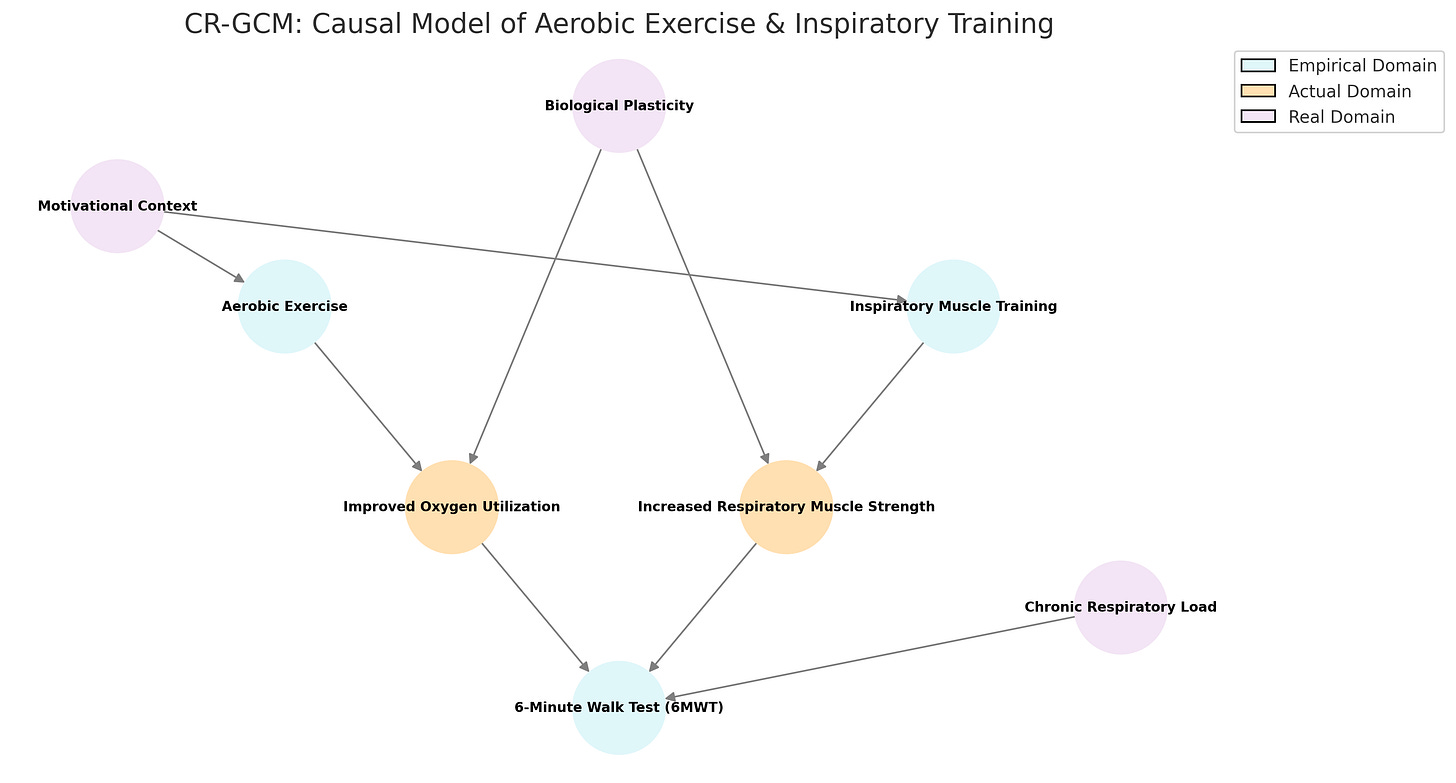

Here is a full Critical Realist Graphical Causal Model (CR-GCM) visualizing:

Empirical variables (what is observed and tested),

Actual-level processes (mechanisms mediating the effects),

Real-level structures (deep causal influences and conditions).

Study Example:

How Aerobic Exercise and Inspiratory Muscle Training affect the 6-Minute Walk Test, mediated and conditioned by deeper causal layers.

Note that variables in the actual, and sometimes even the real, domain can at times be measured and studied, becoming empirical in certain research contexts. However, a single study rarely measures every variable that could be measured; the variables it leaves unmeasured remain actual or real for that study (from a critical realist perspective).1
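One way to work with such a model is to store it as a directed graph whose nodes are tagged by domain. The sketch below is illustrative only: the node names are taken from the examples in this lesson, but the edges and the domain assignments are assumptions made for demonstration and do not reproduce the figure.

```python
from collections import defaultdict

# Domain tags for each variable (assumed for this sketch).
DOMAIN = {
    "aerobic_exercise": "empirical",
    "inspiratory_muscle_training": "empirical",
    "six_minute_walk_test": "empirical",
    "neuromuscular_adaptation": "actual",
    "biological_plasticity": "real",
}

# Hypothetical cause -> effect edges.
EDGES = [
    ("aerobic_exercise", "neuromuscular_adaptation"),
    ("inspiratory_muscle_training", "neuromuscular_adaptation"),
    ("biological_plasticity", "neuromuscular_adaptation"),
    ("neuromuscular_adaptation", "six_minute_walk_test"),
]


def ancestors(node, edges):
    """Return every variable with a directed path into `node`."""
    parents = defaultdict(set)
    for cause, effect in edges:
        parents[effect].add(cause)
    seen, stack = set(), [node]
    while stack:
        for parent in parents[stack.pop()]:
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen


# Which causes of the outcome lie outside what the study observes?
unmeasured = sorted(
    name
    for name in ancestors("six_minute_walk_test", EDGES)
    if DOMAIN[name] != "empirical"
)
print("Non-empirical causes of the outcome:", unmeasured)
```

Listing the non-empirical ancestors of an outcome is a quick way to see which parts of a causal story a given study assumes rather than tests. Because a variable's domain depends on what a study measures, the `DOMAIN` tags would change from one study to the next while the edges stay the same.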

Debiasing Strategies for Clinicians and Researchers

Teach Fallacies Explicitly: Integrate fallacy recognition into curricula.

Use Narrative Carefully: Teach how clinical stories can mislead as well as illuminate.

Check Against Hill’s Criteria: Use structured frameworks like Hill’s to assess whether associations might reflect true causation. While not definitive, they prompt attention to key dimensions:

Temporality: Did the cause precede the effect?

Strength & Consistency: Is the association robust and reproducible?

Biological Plausibility & Coherence: Does it fit with known mechanisms? If not, are the proposed mechanisms plausible given what is known?

Dose-Response: Does more exposure lead to greater effect?

Model Mechanisms: Ask not just if something works, but how and for whom.

Engage in Critical Realist Review: Evaluate evidence across empirical, actual, and real domains.

Normalize Uncertainty: Cultivate tolerance for ambiguity and promote probabilistic reasoning.

Conclusion

The final lesson in Stats4PT reveals that the greatest threat to scientific reasoning is not poor statistics, but seductive stories and cognitive illusions in studies and experiences. It is not enough to know how to calculate; we must know how not to believe too quickly.

Understanding and resisting illusions of causality is essential for truly evidence-based, person-centered practice.

“Statistical thinking will one day be as necessary for efficient citizenship as the ability to read and write.” Attributed to H.G. Wells

But it must be paired with epistemic humility and mechanistic insight.

Final Thoughts

Beyond Fallacy: When Belief Becomes Identity

“The lie is halfway around the world before the truth has its boots on.” — Attributed to Twain, Churchill, and a dozen others.

The point is clear: falsehood is fast, sticky, and socially contagious.

Statistical fallacies and cognitive biases are not the only threats to causal reasoning. Some errors persist not because people fail to understand the data, but because the data threatens a sense of self.

In The Liar’s Tale, Jeremy Campbell explores how our age has made room for a new kind of falsehood, one rooted not in ignorance but in the rejection of shared standards of truth. The line between fact and fiction blurs when truth becomes optional and falsehood becomes identity.

“What I encountered wasn’t disagreement, it was insulation.”

In teaching about pseudoscience, I’ve seen beliefs treated not as hypotheses, but as expressions of personal truth. To question them is to violate a code, not to engage in inquiry.

We must therefore ask not only what makes reasoning flawed, but what makes it fragile: vulnerable not to poor logic alone, but to social performance, cultural identity, and emotional allegiance.

This is where causal reasoning meets courage.

To teach it, model it, and live it, we must recognize that the most persistent fallacies are shields. Sometimes, to see clearly is to feel vulnerable.

1. In the approach I’m developing, variables have more flexibility to move between the empirical, actual, and real domains. Where a variable sits depends on the epistemic context rather than on a fixed ontology. With this approach I’m trying to avoid the epistemic fallacy by accepting that even if something cannot be measured now, and hence sits in the real domain, it may become measurable in the future and therefore empirical. So these domains, rather than being fixed ontologies, have always been epistemological.