These are the books near my desk right now. They’re not just references. They’re part of the intellectual walk I’m taking into the depths of clinical inquiry.

There are clear connections between Moneyball and The Undoing Project, and they weren’t accidental. After writing Moneyball, Michael Lewis realized he had told a version of Kahneman and Tversky’s story without knowing it. The Undoing Project became his corrective: a true prequel that acknowledges the psychological insights that underpinned the very logic of Moneyball, namely the cognitive biases that cloud judgment and the predictive power of disciplined data over intuition.

This post is many things for Lesson 6: another explanation of the delay, an organization of thoughts, and a lead-up. It’s another stopping point in my journey to write that lesson. For those of you walking with me, we have to tread carefully on this topic. We’re not just learning statistics to calculate things. We’re studying how knowledge behaves under uncertainty, and what kind of clinician you must become to hold that responsibility with care.

Lesson 6, “Beyond Data: Mechanisms and Structures in Evidence,” walks a fine line, one that’s common to the human experience and encountered daily: what’s the best decision to make?

Take the decision to wear a seat belt. Sometimes it’s made for you by law. Other times, like here in New Hampshire if you’re over 18, it’s left to you. Choosing to wear it consistently is a decision based on evidence—a decision informed by a model. Another way to put this: it’s an actuarial decision, a personal action guided by population-level statistics.

Of course, there are many individualized factors that influence your actual risk in a car—things that might shift your thinking. You might factor in mechanisms (like your car’s safety features), past experience, or even intuition. But the data-driven models suggest that, all things considered, it’s still the right thing to do.

There’s uncertainty: you don’t know if you’ll need the seat belt on this trip. And ironically, if you did know for certain you were going to be in a crash, you probably wouldn’t get in the car in the first place.

Lately, there’s been a lot of discussion about habits—good habits, bad habits. Good habits tend to lead to good outcomes. Bad habits, to worse ones. The power of a good habit is that the good decision becomes automatic. For me, driving locally might feel like a low-risk situation where a seat belt isn’t necessary. But I wear it anyway—because it reinforces the habit. So that on the day I need it, the decision has already been made.

Similarly, habits of good clinical decision making increase the likelihood that you’ll avoid slipping into bad ones. Lesson 6 is the first in a three-part sequence on causal modeling and statistical fallacies. It introduces the foundations for building a causal model in clinical research, and then leads into a lesson on statistical fallacies and biases—in both research and clinical judgment.

What it’s really doing is setting up the knife-edge we walk between decisions grounded in research and those shaped by mechanisms, experience, and intuition.

In Moneyball (and yes, the book is better—but the movie works), the economic-statistical approach represents “research.” The scouts represent mechanisms, experience, and intuition. There are two errors we risk in contemplating that contrast: first, to create a false dilemma, assuming these approaches are mutually exclusive—they’re not. Second, to assume it’s easy to know when to trust one or the other, or how to blend them.

And that’s the real question: When do we deviate from the model?

“Only override the model if you have causal knowledge the model doesn’t.”

—Paul Meehl, 1973

Years ago, when I first read The Undoing Project, I came across the work of Paul Meehl, a psychologist who wrestled with many of the same issues we’re now wrestling with in physical therapy. His book, Clinical Versus Statistical Prediction: A Theoretical Analysis and a Review of the Evidence, did for psychology what nothing has yet done for our field.

I read it then as a kind of meta-meta-analysis: not just an analysis of data, but an analysis of whether meta-analysis itself works. And let’s remember, meta-analysis is a relatively recent method for synthesizing clinical effectiveness research.

At the time, I read it quickly. Only recently, in preparing for Lesson 6, did I go back and take a deeper dive. What Meehl shows, across decades of evidence, is that statistical prediction consistently outperforms clinical prediction over the long term: when psychologists deviate from data-informed models (models built from aggregated, population-level outcomes), their decisions usually don’t pan out.

Of course, whether something “pans out” is itself subject to bias. Evaluating outcomes requires us to examine not just what happened, but how we interpret what happened. Meehl makes clear that bias in individual judgment—particularly when clinicians override statistical models—often leads to worse outcomes than the collective biases embedded in those models themselves. Or, put differently: individual intuitions are more error-prone than the compromises of group-derived data.

The common story is this:

In a sample of X patients, the best outcomes (on average) result from decision Z—whether that’s a treatment, referral, behavior change, or program. But this current patient wasn’t in that sample. They have factors A, B, and C that make them unique. The PT, too, brings skills D, E, and F into the equation. So they recommend deviating from Z.

Let’s face it—we’re all looking for the edge. That edge is what makes the plan feel individualized, precise, tailored. And of course, no patient wants to be treated like a data point. They don’t want their care based only on what’s best for most people. They want what’s best for them.

This desire for particularity isn’t the problem. The problem is knowing how to reason well about it.

From my vantage point, the tipping point in our profession’s epistemology came on September 22, 1994. I was on my final clinical rotation at Mass General Hospital when The Wall Street Journal published an article titled One Bum Knee Meets Five Physical Therapists (Miller, L., 1994). It caused such a stir that it became a topic at our next staff meeting. Dr. Andrew Guccione, then Director of Physical Therapy at MGH, led that conversation. And when something came up at an MGH staff meeting under Guccione, it mattered. It had traction.

Up to that point, I had been told I was pursuing an M.S. in Physical Therapy—due to a shift in the profession from the more traditional B.S.—because the profession needed to “do more research.” But what was meant by research at that time was something quite specific: mechanistic research, in service of improving mechanistic reasoning. It wasn’t yet about outcomes, or statistical prediction. It was about understanding how things work, so that practice could follow logic.

That Wall Street Journal article catalyzed a movement. The Orthopaedic Section began organizing clinical practice guidelines. The profession as a whole began to shift toward “outcomes-based evidence,” what we now call “research.” But before that moment (and I remember this from my years as a student), research meant something else: any inquiry into mechanisms that could inform practice. The shift to outcomes framed my experience as a new graduate at the APTA Mass Chapter conference in 1995, and it’s why I was invited to the APTA as part of an “Evidence Based Practice Dissemination” workshop in 2009. The chain of reasoning ran mechanisms → inferences → outcomes → decisions, so the thinking went that we could simply start with, and study, outcomes and still make reasonable clinical decisions.

In the last update, I explained why the next Stats4PT lesson is taking longer than expected. I called it a delay of fidelity—not productivity. If you’ve been through Clinical Inquiry with me, you already know: we’re not just learning to calculate. We’re learning to reason. We’re building epistemic habits. And sometimes, those habits demand we slow down.

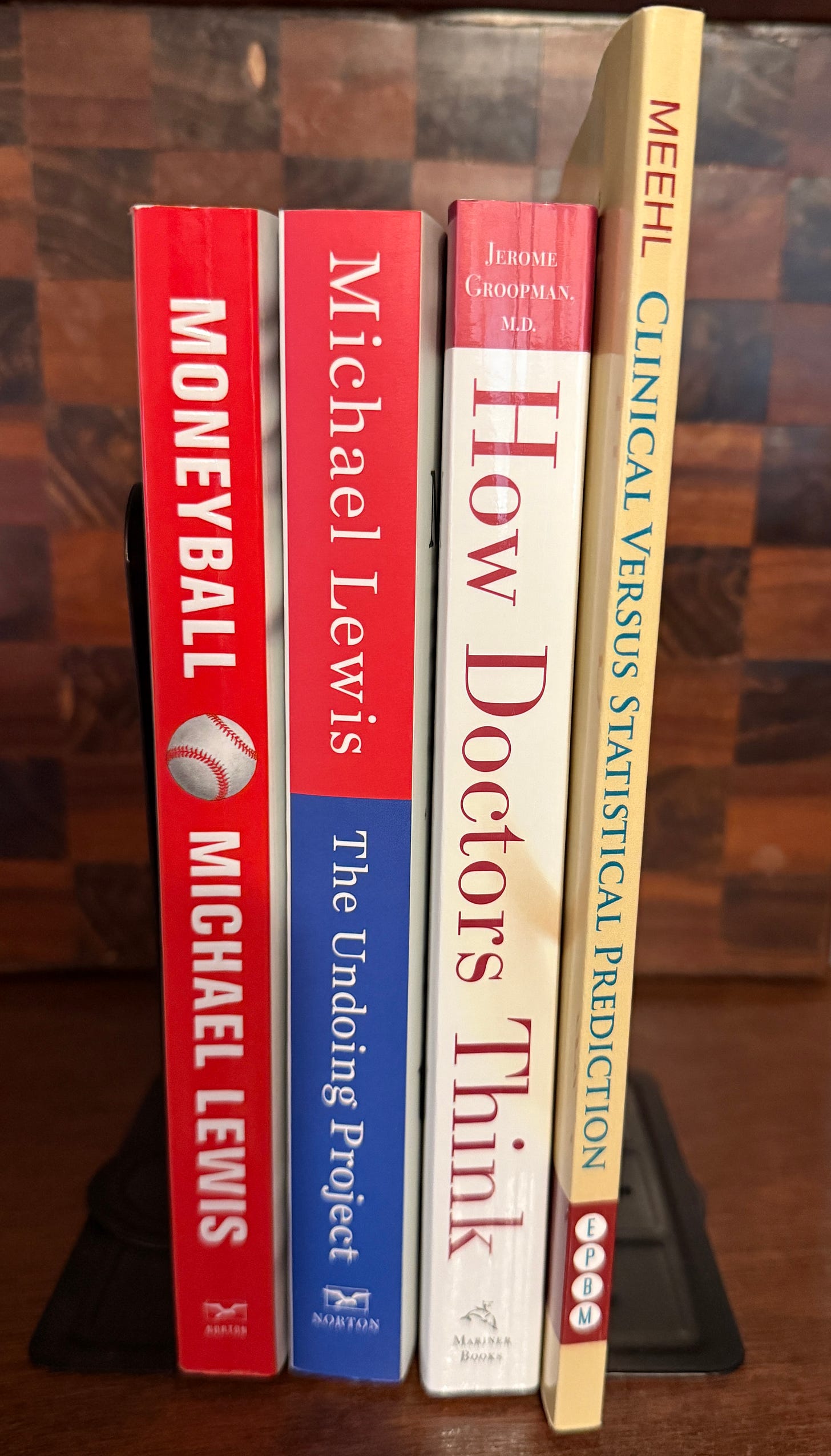

So I wanted to share what I’ve been rereading and reworking. Because these books—Moneyball, The Undoing Project, How Doctors Think, and Meehl’s Clinical vs. Statistical Prediction—all converge on the same question:

How do we reason about uncertainty?

When is it rational to follow population data?

And when is it responsible to deviate—for the sake of the unique patient?

That’s what the next lesson is about.

That’s why I’m taking my time.

Moneyball: Numbers Over Narratives?

You know the setup: undervalued baseball players, outdated and biased scouts, a data-driven GM who bet on on-base percentage (OBP) instead of traditional gut instincts.

Mechanisms could be likened to observing the “five tools” of a good baseball player:

“He’s a five-tool guy. He can run, he can field, he’s got a great arm, he can hit, and he can hit with power.”

Intuition comes across as almost pure bias:

“He’s got the look. He’s a ballplayer.”

“He’s got a great body. Big, raw, the ball explodes off his bat. He throws the club head at the ball, and when he connects, he drives it—it’s gone. He’s got the body you dream about.”

“He passes the eye test.”

Experience comes across as assuming that what they’ve observed in the past will keep happening, despite the bias in how they interpreted what they observed:

“He’s got an ugly girlfriend. That means he’s got no confidence.”

“He’s got a hitch in his swing. It’s a problem.”

“He’s got a bad body.”

Billy Beane is essentially playing Meehl’s hand—trusting population-level prediction over anecdotal expertise.

About the anecdotal use of “tools” to assess someone without looking at their actual performance:

“If he’s a good hitter, why doesn’t he hit good?”

— Billy Beane, Moneyball (2011)

About the biases of player appearances:

“We’re not selling jeans here.”

— Billy Beane, Moneyball (2011)

In the movie, Billy brings on Peter Brand, who reframes performance evaluation from intuition and observation to quantifiable causal reasoning:

“Your goal shouldn’t be to buy players. Your goal should be to buy wins. And in order to buy wins, you need to buy runs. In order to buy runs, you need to get on base.”

— Peter Brand, Moneyball (2011) (fictional name for Paul DePodesta)

This is what makes OBP in Moneyball more than just a sabermetric trend—it’s a model of mechanistic + empirical reasoning, and here’s how that breaks down:

Runs cause wins.

On-base events cause runs.

Therefore, on-base percentage is undervalued causal leverage.
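One way to make that chain explicit, in the spirit of graphical causal models, is to write it as a directed graph and ask whether a path runs from the variable you can act on to the outcome you care about. This is a toy sketch, not a sabermetric model: the node names and the “Looks” branch are hypothetical, chosen only to mirror the contrast between on-base events and the kind of aesthetic criterion scouts leaned on.

```python
from typing import Dict, List, Optional

# Toy causal graph (hypothetical): each node maps to its direct effects.
# "OBP" -> "Runs" -> "Wins" mirrors the chain above; "Looks" is an
# illustrative scouting criterion that feeds the scouts' rating but has
# no arrow toward "Wins".
CAUSAL_GRAPH: Dict[str, List[str]] = {
    "OBP": ["Runs"],
    "Runs": ["Wins"],
    "Looks": ["Scout rating"],
    "Scout rating": [],
    "Wins": [],
}


def directed_paths(
    graph: Dict[str, List[str]],
    start: str,
    goal: str,
    path: Optional[List[str]] = None,
) -> List[List[str]]:
    """Return every directed path from start to goal via depth-first search."""
    path = (path or []) + [start]
    if start == goal:
        return [path]
    found: List[List[str]] = []
    for child in graph.get(start, []):
        if child not in path:  # skip nodes already on this path (cycle guard)
            found.extend(directed_paths(graph, child, goal, path))
    return found


print(directed_paths(CAUSAL_GRAPH, "OBP", "Wins"))    # [['OBP', 'Runs', 'Wins']]
print(directed_paths(CAUSAL_GRAPH, "Looks", "Wins"))  # []
```

A path only tells you the graph encodes leverage; whether the arrows themselves are right is the mechanistic and empirical question.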

Billy Beane is capitalizing on a causal relationship that we can understand through mechanisms and that has been demonstrated empirically. There is a demonstrable statistical relationship between a team’s on-base percentage and its win total, and baseball was ignoring it because of aesthetic bias and bad tradition.

It works. Mostly.

The movie does include a caveat; it’s subtle but present. Jeremy Giambi was a statistically smart pick, but his off-field behavior, which scouts had raised as a concern, undermined the model’s predictive value. His story reminds us of what models often miss: unmeasured, real-domain causes that influence outcomes.

That tension—between the empirical and the real—is critical for us.

The Undoing Project: Bias in the Mind’s Eye

Michael Lewis tells the story of Kahneman and Tversky, who mapped the biases that shape expert judgment. We now teach their findings as cautionary tales: base rate neglect, overconfidence, the availability heuristic.

Their work explains why clinicians make the errors Meehl catalogued in Why I Do Not Attend Case Conferences. Meehl wasn’t just irritated by low standards—he diagnosed a system that failed to engage in structured reasoning, that treated anecdote and statistical evidence as epistemic equals, and that rewarded participation over truth-seeking.

One of Meehl’s most devastating critiques is that clinicians often think and argue in ways that violate basic statistical logic—ignoring base rates, overinterpreting unreliable observations, and failing to distinguish differential diagnostic value from general consistency. He wrote:

“The vulgar error is the cliché that ‘We aren’t dealing with groups, we are dealing with this individual case.’ … Such departures are, prima facie, counterinductive.”

— Meehl, 1973

In other words, clinicians often feel justified in overriding population-level data without a coherent mechanistic reason to do so. Kahneman and Tversky helped us name the cognitive traps that lead to this error; Meehl demanded that we hold ourselves to high epistemic standards instead of indulging them.

Can we fully correct for bias with data alone? Or do we need better models of mechanism—ones that explain why a certain pattern holds, and when it fails?

This is the deep convergence point between Meehl, Lewis, and the critical realist graphical causal model work we’re building. I’m leaning into this hypothesis:

Bias tells us what not to trust.

Statistics tell us what tends to be true.

Mechanism tells us when it’s rational to deviate.

And I’m partly held up trying to think of ways to verify that hypothesis.

Isn’t it ironic?

“Life has a funny way of sneaking up on you, when you think everything’s okay…”

— Alanis Morissette

How Doctors Think: The Return of the Patient

Groopman turns back toward the human. He doesn’t reject data—but he’s alert to the cost of over-correction. He shows us what happens when clinical presence is replaced by prediction rules, when the person is abstracted into a probability.

This isn’t anti-science—it’s a warning:

When we over-index on the empirical domain, we may see data but miss causality.

We may predict outcomes without understanding how they emerge.

This is where Bhaskar’s stratified ontology becomes essential:

The Empirical: what we can observe—signs, symptoms, test results.

The Actual: what happens—whether or not it’s observed.

The Real: the underlying structures, mechanisms, and powers that generate events.

Groopman is reminding us not to skip the last domain. And in doing so, he resonates directly with the insights from “Rethinking Causality, Complexity and the Unique Patient”:

“We need a model of causation that takes account of non-linearity, emergence, and unique contextual constellations.”

— Greenhalgh, et al.

That work challenges the Humean regularity theory of causation (cause = correlation), insisting instead on a dispositional view:

Causes are not if-then triggers, but tendencies within systems.

Outcomes are not determined, but emerge from the interaction of mechanisms within context.

In clinical reasoning, that means:

The same treatment may not work in two seemingly similar patients.

A “low probability” outcome may still emerge—because a dormant mechanism became active.

Meehl: Trust the Data (Until You Shouldn’t)

Meehl’s work is the linchpin. He showed in psychology, with exhaustive clarity, that population (actuarial) models consistently outperform clinicians in predictive tasks, whether in schizophrenia prognosis, parole decisions, or student success. His lesson wasn’t anti-clinician. It was anti-sloppiness.

“Don’t deviate from the model because you feel like it. Deviation is only rational when it’s grounded in causal knowledge that isn’t captured in the stats.”

-Meehl (1973)¹

In Clinical Inquiry terms: this is when your critical realist graphical causal model justifies action beyond the prediction rule. This is where reasoning becomes scholarly, not just experiential.
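The quoted principle can be read as a gate that a proposed deviation has to pass. This is a hypothetical sketch of that reading, not a published decision tool: `PredictionRule`, `OverrideRequest`, `override_is_justified`, and the variable names are invented for illustration. It encodes only the two checks the quote implies: a deviation needs a named causal factor, and that factor must be something the rule does not already account for.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class PredictionRule:
    """A hypothetical actuarial rule, described by the predictors it was built on."""
    name: str
    predictors: FrozenSet[str]


@dataclass(frozen=True)
class OverrideRequest:
    """A clinician's proposed deviation from the rule's recommendation."""
    rationale: str
    causal_factor: Optional[str] = None  # mechanism the clinician says the rule misses


def override_is_justified(rule: PredictionRule, request: OverrideRequest) -> bool:
    """Allow a deviation only when it cites a causal factor outside the rule's inputs."""
    if request.causal_factor is None:
        return False  # a hunch with no named mechanism
    # If the factor is already a predictor, the rule has already weighed it.
    return request.causal_factor not in rule.predictors


rule = PredictionRule("hypothetical LBP rule", frozenset({"symptom_duration", "fear_avoidance"}))
print(override_is_justified(rule, OverrideRequest("I just have a feeling")))                        # False
print(override_is_justified(rule, OverrideRequest("Pain has lasted long", "symptom_duration")))     # False
print(override_is_justified(rule, OverrideRequest("New red-flag finding", "suspected_fracture")))  # True
```

The gate is deliberately narrow: passing it doesn’t show the override is correct, only that it rests on causal knowledge the rule lacks, which is where the causal model has to do its work.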

But what makes Meehl’s 1973 paper so enduring is his unsparing dissection of the kinds of errors that creep into expert judgment, especially in group settings like case conferences. His critique reads like a diagnostic manual of epistemic malpractice.

Meehl’s Warnings from Why I Do Not Attend Case Conferences

Base Rate Neglect

“It is a statistical fact that most clinicians do not know or do not use the base rates of conditions in judging cases.”

Clinicians ignore how common or rare a condition is when making diagnoses. In PT, this could mean over-interpreting rare causes for common symptoms.
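A short Bayes’ theorem calculation shows why base rates matter. The sketch assumes a hypothetical test with 90% sensitivity and 90% specificity; the numbers are illustrative, not drawn from any PT study. The same positive finding means very different things depending on how common the condition is in the population you’re seeing.

```python
def positive_predictive_value(sensitivity: float, specificity: float, prevalence: float) -> float:
    """P(condition | positive finding), computed with Bayes' theorem."""
    true_positives = sensitivity * prevalence
    false_positives = (1 - specificity) * (1 - prevalence)
    return true_positives / (true_positives + false_positives)


# Same hypothetical test, two different base rates.
for prevalence in (0.01, 0.30):
    ppv = positive_predictive_value(0.90, 0.90, prevalence)
    print(f"prevalence {prevalence:.0%}: P(condition | positive) = {ppv:.2f}")
# prevalence 1%: P(condition | positive) = 0.08
# prevalence 30%: P(condition | positive) = 0.79
```

At a 1% base rate, roughly 11 of every 12 positives are false alarms, even with a test that sounds accurate.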

Confusing Diagnostic Consistency with Discrimination

“The fact that a symptom is consistent with a diagnosis tells you very little. The question is: how discriminating is it?”

Meehl emphasizes that a finding being plausible doesn’t mean it’s helpful. This error shows up when PTs rely on findings like “weak glutes” that show up in almost everyone.
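Meehl’s distinction maps onto likelihood ratios: a finding discriminates only if it is much more common in people who have the condition than in people who don’t. The proportions below are hypothetical, chosen to contrast a near-universal finding (the “weak glutes” pattern) with a discriminating one; they are not estimates from the literature.

```python
def likelihood_ratio(p_with: float, p_without: float) -> float:
    """Positive likelihood ratio: how much more often the finding appears with the condition."""
    return p_with / p_without


def post_test_probability(pre_test: float, lr: float) -> float:
    """Update a pre-test probability with a likelihood ratio, via odds."""
    pre_odds = pre_test / (1 - pre_test)
    post_odds = pre_odds * lr
    return post_odds / (1 + post_odds)


pre_test = 0.20  # hypothetical pre-test probability
findings = {
    "near-universal finding": likelihood_ratio(0.90, 0.85),  # consistent, barely discriminating
    "discriminating finding": likelihood_ratio(0.80, 0.10),
}
for name, lr in findings.items():
    print(f"{name}: LR+ = {lr:.2f}, post-test = {post_test_probability(pre_test, lr):.2f}")
# near-universal finding: LR+ = 1.06, post-test = 0.21
# discriminating finding: LR+ = 8.00, post-test = 0.67
```

Both findings are “consistent with” the diagnosis, but only the second one moves the probability in a clinically meaningful way.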

Ignoring Validity for Vividness

“Anecdotal vividness is treated as if it were diagnostic value.”

This is the classic Undoing Project problem: we remember emotionally compelling stories, not statistically significant patterns.

Overconfidence in Experience

“The clinician may say, ‘But I’ve seen this many times.’ But no one checks whether that experience tracks with outcome data.”

Meehl’s critique here anticipates CIF’s project: distinguishing between pattern recognition and mechanism-based causal reasoning.

Meehl → CIF: From Psychotherapy to Physical Therapy

What Meehl was trying to do in psychiatry and psychotherapy—restore epistemic discipline to clinical reasoning—is precisely what we’re doing in physical therapy. We’re asking:

When can you trust a general rule?

When must you explain an outlier?

And how do you know the difference?

His call was simple but demanding:

Don’t just think. Think well. Think with structure. And above all, don’t confuse insight with inference.

That’s the spirit behind these posts and Stats4PT lessons. That’s what makes the CIF more than case discussion. It makes it a rigorous, honest attempt to rebuild the epistemology of clinical care—from the inside out.

Why the Stats4PT Lesson Takes Time

Because this isn’t just about data—or even about bias. It’s about building a form of clinical reasoning that can hold complexity without collapsing into either overconfidence or indecision.

Michael Lewis gave us two lenses: Moneyball, where we learned to trust the undervalued patterns in data, and The Undoing Project, where we learned to question the ways our minds make sense of uncertainty. Both stories point toward a problem—but also a possibility.

Groopman reminded us not to let prediction replace presence. To be with patients in ways that respect both the evidence and the human complexity that lives beneath it. His call is for wisdom, not just knowledge.

And Meehl—quietly and rigorously—showed us that precision matters. That if you’re going to override the model, you’d better have a reason grounded in causal structure, not just intuition. Not because human judgment is worthless, but because it’s not always accountable to the truth.

So yes—this lesson is taking time. Because what we’re trying to model in clinical inquiry is not just a technique. It’s a stance:

Humble before the data.

Curious about what mechanisms the model might be missing.

Clear-eyed about the biases that shape how we think.

It’s not about choosing one way to reason—it’s about knowing when and why to trust each way. That’s not a skill you rush. It’s a habit you build.

Thanks for your patience—and your company on this walk!

Afterword

Writing this post reminded me of a paradox that surfaced in a conversation with my wife after we rewatched Moneyball. I was considering assigning it in my Clinical Inquiry I course this fall (actually watching it in class). She asked, reasonably: “Why have them watch the whole movie with your commentary when you could just tell them what it means?”

And she’s not wrong. It’s tempting to skip the story and go straight to the takeaway.

But that exchange reminded me of something we all experience as educators: the irony that we often learn best through rich, emotionally charged, true stories that reflect lived experience, the kind that embed knowledge in context, emotion, and memory. And yet, when we’re in the moment of receiving that story, we can find ourselves wanting to just be told the point.

We crave the clarity of a summary, even when it’s the texture of the narrative that actually helps the learning stick.

It’s not unlike why the Bible is composed as a tapestry of genres—stories, poetry, parables, genealogies—not simply a list of instructions. The form is part of the formation. It’s designed to shape people, not just inform them. And while I’m certainly not comparing Moneyball to Scripture in any theological sense, I do think it shares something of the pedagogical pattern: stories help us wrestle with ideas more deeply than summaries ever can.

So yes—my students could just hear “trust the data over intuition.” But watching Moneyball lets them feel that tension, wrestle with it, and see its stakes. And that, I think, is what good teaching—and good inquiry—should do.

¹ Meehl, P. E. (1973). Why I do not attend case conferences. In Psychodiagnosis: Selected papers (Chapter 13, pp. 225–302). Minneapolis: University of Minnesota Press.

Great thoughts, and take your time; the upcoming content sounds exciting to get into.

I’m glad you elaborated on the Meehl article about statistical prediction being superior to clinical judgement. It’s convincing that this occurs so consistently across diverse conditions. I read it a few weeks ago, largely forgot about it, and then read it again. There were 10 examples he used in the 1993 publication (predicting MI, academic success, police termination, etc.), and I’m sure a lot more has been published since. I think physical therapy has been trying to capitalize on this kind of thinking, given the number of clinical prediction rules out there. However, a lot of them have only been through the derivation stage, and I’ve been hesitant to apply them. I really trust the diagnostic CPRs, but the ones that forecast a response to a certain intervention make me dubious. The past few months have made it easier to appraise how useful they can be.

Here are some examples that I thought about …

Using ROM to predict full recovery following rotator cuff repair

Tonotsuka H, Sugaya H, Takahashi N, Kawai N, Sugiyama H, Marumo K. Target range of motion at 3 months after arthroscopic rotator cuff repair and its effect on the final outcome. Journal of Orthopaedic Surgery. 2017;25(3)

Researchers identified that having at least 120 degrees of forward flexion and at least 20 degrees of ER was predictive of full recovery, as defined by not having a re-tear. I remember reading this and thinking that 120 degrees at 13 weeks is pretty limited, but patients followed for 2 years who obtained this range at the 3-month mark did well.

Return to work following a back injury

Wynne-Jones G, Cowen J, Jordan JL, Uthman O, Main CJ, Glozier N, van der Windt D. Absence from work and return to work in people with back pain: a systematic review and meta-analysis. Occup Environ Med. 2014 Jun;71(6):448-56

I couldn’t find the original publication but I’ve repeatedly read the statistics on how the duration of missed work following a back injury is associated with a higher likelihood of delayed return to work. If an individual doesn’t return to work after two years, there is a very high chance of them never working again.

Return to sport battery (quadriceps index, hop test scores, knee specific outcome tests)

Plenty of articles here on prediction of graft failure or second injury after ACLr.

I think there are some areas in PT practice where statistical prediction is excellent and the data to draw from is rich, and there are of course other areas where we have more uncertainty. It reminds me of Schön’s description of “technical rationality” vs. “muddling” in professional practice. The first part of his book The Reflective Practitioner, where he explores these two processes, is fantastic. He describes technical rationality as how professionals apply established theories, research, and techniques to solve well-defined problems. He uses the analogy of practicing on the high, hard ground. Treating BPPV comes to mind here. He then describes muddling through, where professionals navigate messier, ill-defined problems. He uses the analogy of practicing in a muddy swamp. Managing nonspecific LBP comes to mind here.

I drew a picture to describe this, but I can't upload it into Substack comments. I'll try another way if anyone is interested :)